Speech Recognition

Human Voice

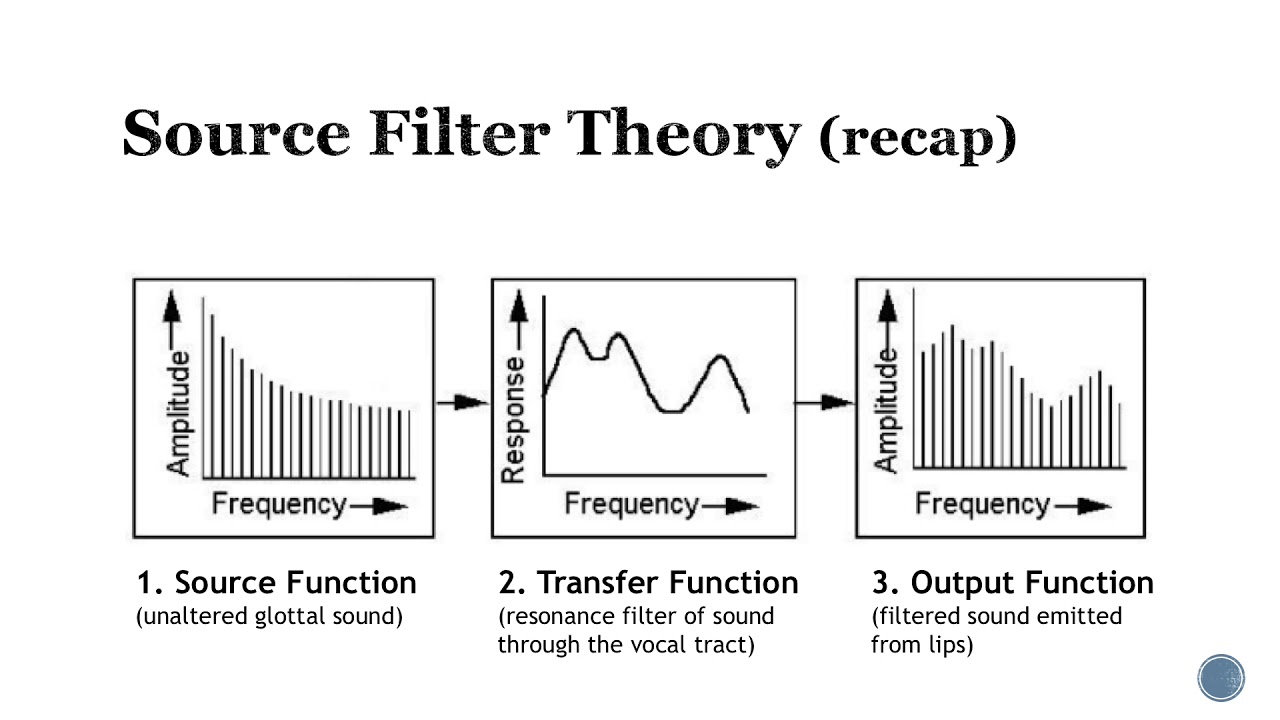

Voice Production

In humans, the sound is produced by the larynx which is also known as the the voice box.

Source Filter Theory

Types of sounds

Voiced and Voiceless sounds

/b/ is an example of voiced, you feel the vibration /th/ is an example of voiceless, no vibration

Sound wave frequencies, fundamental frequency of 125 Hz for men and 210 Hz for women.

Pronunciation

For pronunciation, we split a word into syllable(s). A syllable usually contains one vowel sound, with or without surrounding consonants.

Consonants are sounds that are articulated with a complete or partial closure of the vocal tract. It can be voiced or voiceless.

Vowels are voiced sounds.

Graphemes / Phonemes

Not a one-to-one relation between written words and phones.

Some graphemes are associated to multiple phonemes.

Phones

Phones are the acoustic realization of phonemes.

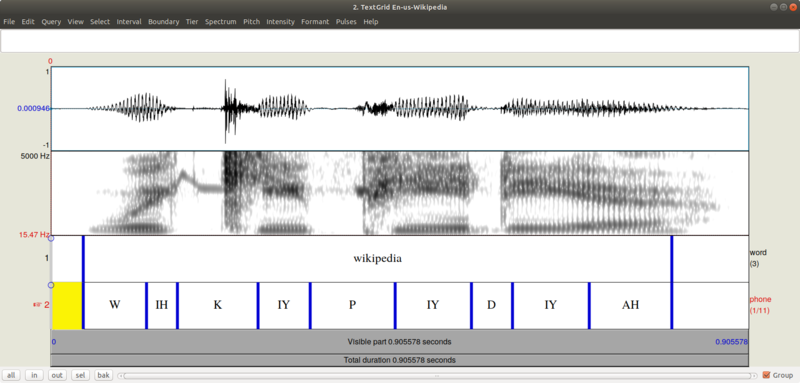

The first half of the diagram is the audio for the fricative consonant /sh/. It is clearly different from the vowel after it.

Speech recognition also classifies some acoustic signals.

Feature Extraction

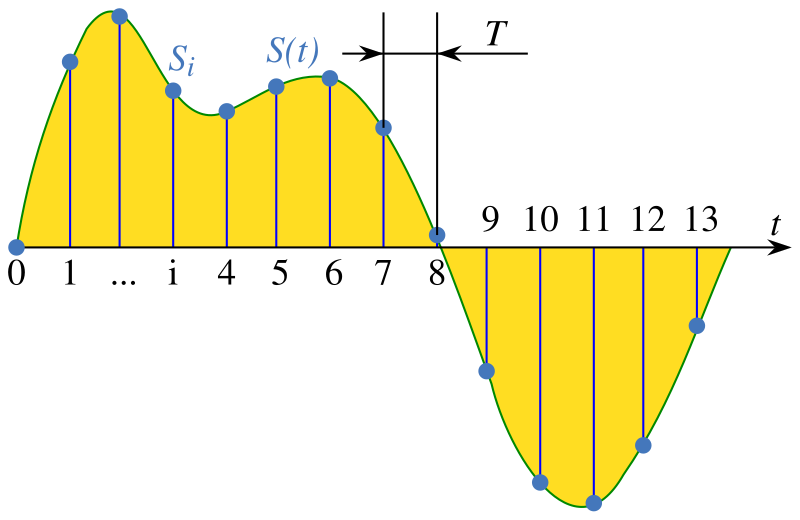

Sampling

In signal processing, sampling is the reduction of a continuous-time signal to a discrete-time signal.

Speech signals can usually be sampled at a much lower rate. For most phonemes, almost all of the energy is contained in the 100 Hz-4 kHz range, allowing a sampling rate of 8 kHz.

Analyzing the sound wave

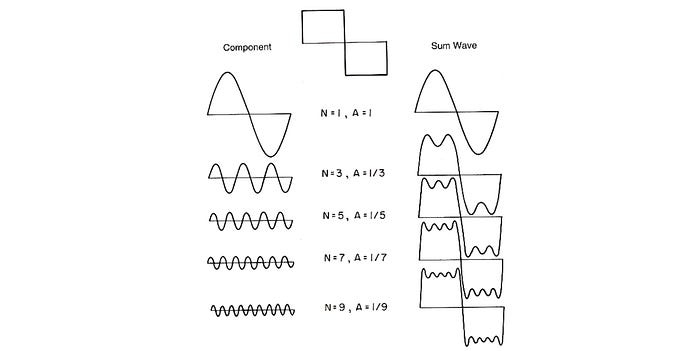

In speech recognition, we want to go from time domain to frequency domain. We apply the Fourier transformation for this.

Spectrograms

We can use spectrograms to see the frequency energy distribution of vowels and different phones.

MFCC

Mel-Frequency Cepstrum Coefficients

Mel Scale

Perceptually relevant scale - closer to human's perception. Tries to represent how humans perceive frequencies.

Cepstrum Coefficients

Use spectral envelope to find formants which carry identity of sound, or vocal tract frequency responses.

MFCC uses discrete cosine transform instead of fourier transform to obtain the coefficients.

Steps

- Waveform, Time domain

- DFT, Frequency domain - obtain spectrograms

- Log-Amplitude Spectrum, Log of Power - Decibles - less variation between high and low energy

- Mel-Scaling - Perceptually relevant scaling on frequencies

- Discrete Cosine Transform - Extraction of spectral envelop to create a Cepstrum

- MFCCs - Take first 12 coefficients (slower variations)

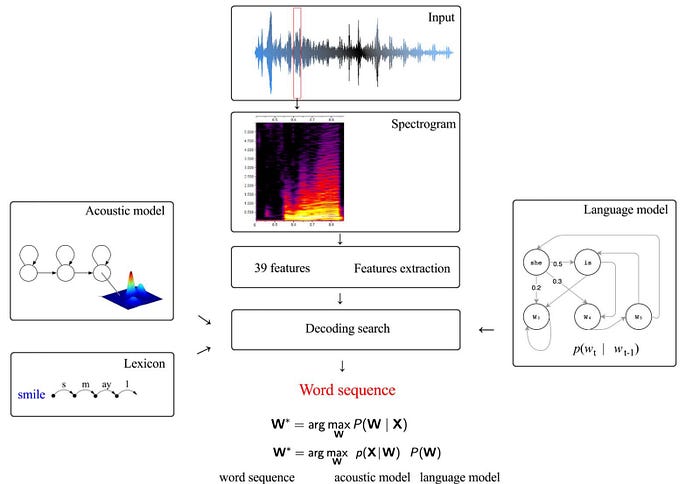

39 features = 39 MFCCs per frame

12 parameters are related to the amplitude of frequencies. 1 parameter is the energy of the signal. 13 more for derivatives. 13 more for second derivatives.

Features used in:

- Speech recognition

- Mood classification (sentiment analysis)

- Music genre classification

Acoustic-phonetic model

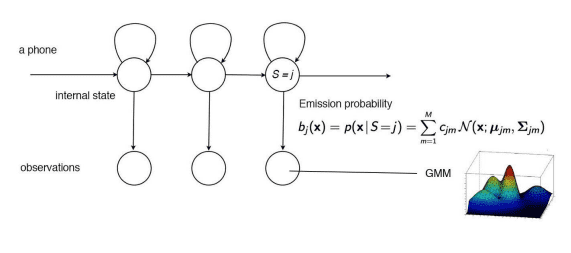

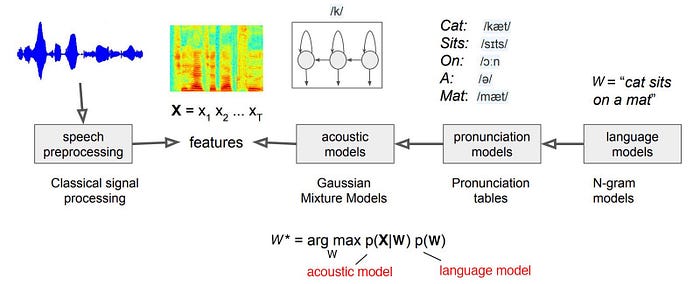

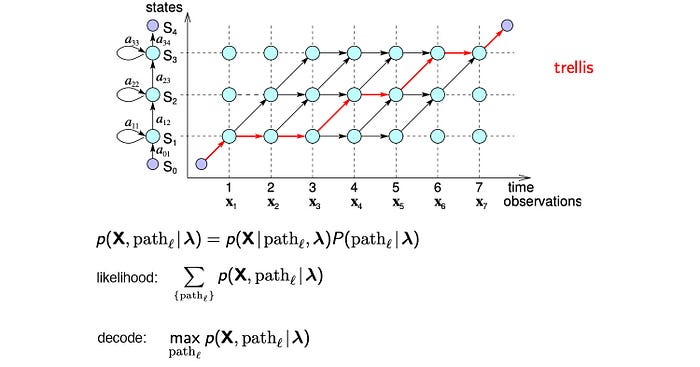

We capture pronunciation lexicons using hidden markov models. We have states for each phonemes, and we try to predict using a probabilistic model the acoustic signals.

In speech recognition, we have collected corpora which are phonetically transcribed and time-aligned. (the start and the end time of each phone are marked.) TIMIT is one popular corpus that contains utterances from 630 North American speakers.

Gaussian Mixture Model (GMM)

Single gaussian is too simplistic and does not capture enough variations. There would be a mixture model for each dimension, for each phoneme.

In summary here is an overview of the Acoustic-Phonetic model:

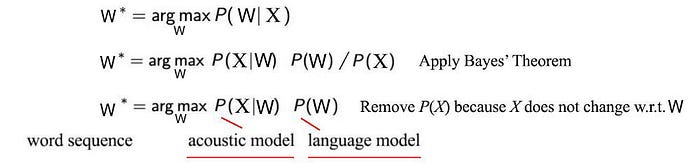

Traditional ASR

Architecture of traditional automatic speech recognition

Training and Testing

Generative model:

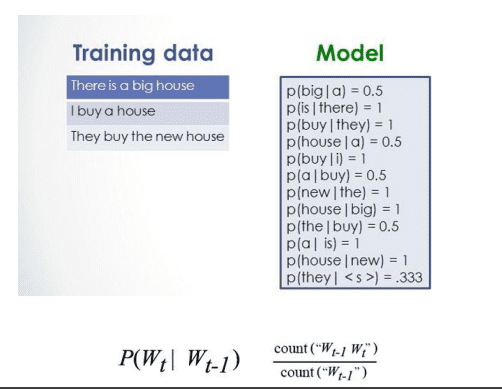

Training a bigram model:

Estimating the probability of each word:

This is known as the Viterbi algorithm. It tries to find the most likely path for a word.

Neural Network End-to-End Models

Evaluation

Output Examples

Target: To illustrate the point a prominent middle east analist in washington recounts a call from one campaign

Output: Two alstrait the point a prominent midille east analyst im washington recouncacall from one campaign

Target: T.W.A. also plans to hang its boutique shingle in airports at Lambert Saint

Output: T.W.A. also plans tohing its bootik single in airports at Lambert Saint

Target: All the equity raising in Milan gave that stock market indigestion last year

Output: All the equity raison in Mulong gave the that stackr market in to justian last year

Word Error Rate

where:

- , substitutions

- , insertions

- , deletions

- , number of words in the reference

Evaluation Strategy

- Choose a test sample

- Create reference transcripts

- Run a test

- Create ASR hypothesis transcripts

- Calculate WER

- Make an assessment

Attention to results provided. WER does not tell you what caused the problems ... and might be very OPTIMISTIC.

Were those results obtained in conditions that match YOUR intended use?

- Speaker

- Who is speaking?

- Which languages?

- Utterance

- Text reading (continuous) or Spontaneous

- Discourse Content

- Commands?

- Specific domain?

- Environment

- Noise conditions

- Channel (microphone, phone)

Deep Learning

Many components make up a complete speech application:

- speech transcription

- word spotting / trigger word

- speaker identification / verification

Traditional ASR

Traditional ASR components:

audio wave

feature representation

decoder

- acoustic model

- language model

- pronunciation model

Traditional pipeline is highly tweak-able, but also hard to get working well.

Historically, each part of system has own set of challenges.

- E.g., choosing feature representation.

DL speech pipeline walkthrough

Starter code: github.com/baidu-research/ba-dls-deepspeech

Preprocessing

Raw audio can be broken down into a 1D vector.

Two ways to start:

- minimally pre-process (simple spectrogram)

- Train model from raw audio wave

Spectrograms

- Take a small window (e.g. 20 ms) of Waveform

- compute FFT and take magnitude

- describes frequency content in local window

- concatenate frames from adjacent windows to form "spectrogram"

Acoustic Model

Goal: create a neural network (DNN/RNN) from which we can extract transcription, y.

- Train from labeled (x,y*)

Main issue: length (x) != length(y)

- Don't know how symbols in y map to frames of audio.

Multiple ways to resolve:

- use attention, sequence to sequence models

- use Connectionist Temporal Classification (CTC)

CTC

Basic idea:

- RNN output neurons encode distribution over symbols. Note length(c) == length(x)

- For phoneme-based model:

- For grapheme-based model:

Output neurons define distribution over whole character sequences assuming independence:

- Define a mapping

Given a specific character sequence , squeeze out duplicates + blanks to yield transcription

- Maximize likelihood of under this model

Update network parameters to maximize likelihood of correct label

Use usual gradient descent methods to optimize. Tune entire network with backpropagation.

Training

Getting RNN to train well is tricky.

Two tricks:

- "SortaGrad": order utterances by length during first epoch

- Batch normalization

Decoding & language models

Network outputs . How do we find most likely transcription from ?

Using approximation is a bad solution, but a useful diagnostic to "eyeball" models.

No efficient solution in general. Resort to search!

Language models

Even with better decoding, CTC model tends to make spelling + linguistic errors.

modeled directly from audio.

- but not enough audio data to learn complicated spelling and grammatical structure

- only supports small vocabulary

- for grapheme models: "Tchaikovsky" problem

Two solutions:

- fuse acoustic model with language model

- incorporate linguistic data

- predict phonemes + pronunciation lexicon + LM

Possible to train language model from massive text corpora

- learn spelling + grammar

- greatly expand vocabulary

- elevate likely cases over unlikely cases

Standard approach: n-gram models

- simple n-gram models are common and well supported

- train easily from huge corpora

- quickly update to follow trends in traffic

- fast lookups inside decoding algorithms

Given a word-based LM of form \\\\\\\mathrm{arg max} P(w|x)P(w)^{\alpha}[length(w)]^{\beta}$

for characters that make up .

and are tunable parameters to govern weight of LM and bonus/penalty for each word.

Basic strategy, use beam search to maximize $\mathrm{arg max} P(w|x)P(w)^{\alpha}[length(w)]^{\beta}$.

Start with set of candidate transcript prefixes, A={}.

For t=1..T:For each candidate in A, consider:1. Add blank: don't change prefix; update probability using AM2. Add space to prefix; update probability using LM3. Add a character to prefix; update probability using AMAdd new candidates with updated probabilities to $A_{new}$.A := most probable prefixes in $A_{new}$

Rescoring

Another place to plug in DL algorithms:

- System usually produce N-best list.

- Use fancier models to "rescore" this list

Scaling up

Transcribing speech data isn't cheap, but not prohibitive:

- roughly 50 cents to $1 per minute

Typical speech benchmarks offer 100s to few 1000s of hours.

Types of speech data

Application matters, we want to find data that matches our goals

- Styles of speech

- read

- conversational

- spontaneous

- command/control

- issues

- Disfluency / stuttering

- Noise

- Mic quality / #channels

- Far field

- Reverb / echo

- Lombard effect

- Speaker accents

- applications

- dictation

- meeting transcription

- call centers

- device control

- mobile texting

- home/IoT/Cars

Read speech is inexpensive way to get more data, but isn't that effective.

Augmentation

You can use a sound processor to simulate different environments and get different types of speech data.

Its easier to engineer data pipeline than to engineer recognition pipeline.

Computation

Computation takes a lot of time and lots of computing power. Solution is to use more GPUs with data parallelism.

Production

So far:

- train acoustic + language models

- scale them up

How to serve users?

- accuracy is only one measure of performance

- user care about latency

- need to serve economically